An AI drone has beaten three champion drone racers, setting a “new milestone” as the first autonomous system capable of winning against human champions at a physical sport, researchers said.

An AI system called Swift won multiple races against the trio in first-person view drone racing, where pilots fly quadcopters remotely at speeds of more than 100 kilometres per hour, according to researchers from the University of Zurich and tech company Intel.

Swift is the latest addition to artificial intelligence’s triumphs in competitions against humans, following the successes of IBM’s Deep Blue against Garry Kasparov at chess in 1997 and Google’s AlphaGo against top champion Lee Sedol at Go in 2016.

It took on 2019 Drone Racing League champion Alex Vanover, 2019 MultiGP Drone Racing champion Thomas Bitmatta and three-time Swiss champion Marvin Schaepper.

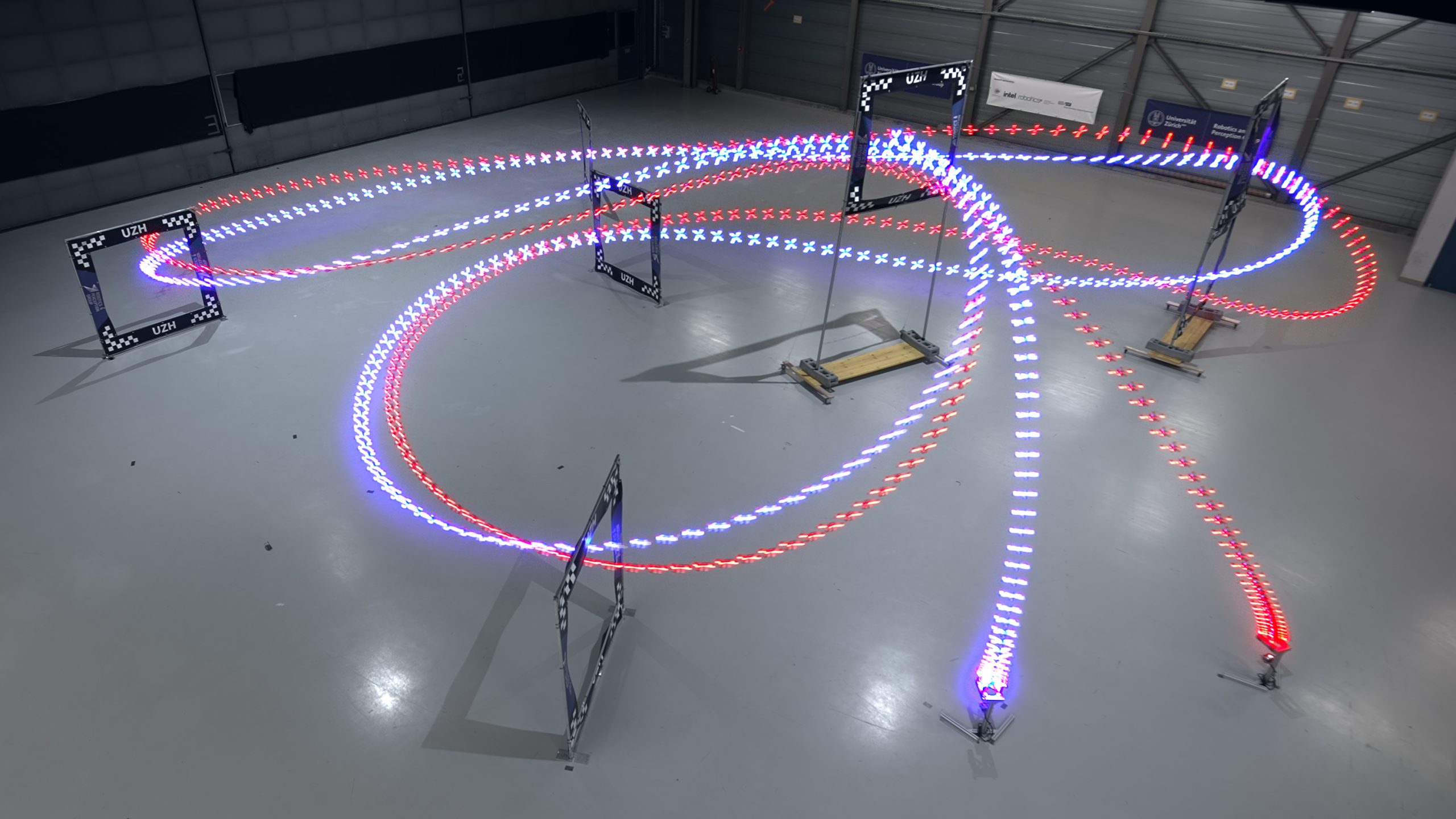

The races were held between June 5 and 13 last year in a hangar at Dubendorf Airport, near Zurich, on a purpose-built track that demanded “challenging manoeuvres”.

The AI-powered drone achieved the fastest lap overall, but the human pilots were “more adaptable”: the autonomous drone failed when conditions differed from those it was trained for.

Davide Scaramuzza, head of the Robotics and Perception Group at the Swiss university, said that flying drones faster increases their “utility”, because they have limited battery capacity and need speed to cover large spaces in less time.

He added the speed could prove useful for rescue drones entering buildings on fire, and for space exploration, forest monitoring and shooting action scenes on film sets.

Swift reacts in “real time” to data collected by a camera onboard the drone, according to the research, and it was trained in a simulated environment where it taught itself to fly by “trial and error”.

Prof Scaramuzza said: “Physical sports are more challenging for AI because they are less predictable than board or video games.

“We don’t have a perfect knowledge of the drone and environment models, so the AI needs to learn them by interacting with the physical world.”

The research, titled Champion-Level Drone Racing using Deep Reinforcement Learning, was published in the journal Nature on Wednesday.